Hash Global is an Asia-based investment institution focused on digital assets and blockchain ecosystems. Founded in 2018, the company is dedicated to investing in Chinese entrepreneurs around the globe who are working on early-stage blockchain projects that combine Web2 and Web3 technologies. Hash Global is also an early supporter of Moonshot Commons and has invested in numerous other top projects, including but not limited to RSS3, EthSign, Mask Network, SeeDao, imToken, Infinity Stone, Debank, Matrixport, Hashquark, and Meson Network. In Moonshot Original #1, KK, Founder of Hash Global, draws insights from Dr. Lu Qi and Dr. Zhang Hongjiang and shares his thoughts on large language models (LLMs) and their application in ChatGPT. He argues that the rapid growth of AI will drastically raise our demand for Web3 technologies.

KK: I have compiled some thoughts to share with Web3 professionals:

1. If you spend enough time with the family pet, you will realize that a cat is a Large Language Model (LLM) or a combination of models that are just a few dimensions simpler than you.

2. The evolution of the LLM will be similar to the evolution of genes and life. The emergence of the transformer model architecture is like the first accidental assembly of molecules into a replicable RNA. GPT-1, 2, 3, 3.5, 4, and the models that follow are like an outburst of life. Barring surprises, development will keep accelerating and eventually get out of control. The model itself grows ever more complex. The law of the universe gives the model the internal drive to keep getting more complicated.

3. About thirty years ago, Steven Pinker argued that language is a human instinct, just as we now realize that basic textual language ability is the source of the generalization ability we struggle to achieve in model training. Why did artificial intelligence and language research fail in the past? Because they worked in the wrong direction: language is an instinct that emerges when the nervous system of the human brain is complex enough. Whether the language evolves into Chinese, English, or bird language depends on the environment and the tribe. The generalized language model, superimposed with other models, produces the superb intelligence of humans. Actively designing and building an intelligent agent or AGI runs this path completely in reverse. It may not be impossible, but it is 100 million times more difficult.

4. If a higher-dimensional intelligence or god exists above our universe, it would have been as surprised to see the Cambrian Explosion on Earth as we are to see ChatGPT today. I can’t fully explain it; I can only slowly experience, learn, and try to understand.

5. AGI can be broken down into reasoning, planning, solving problems, thinking abstractly, comprehending complex ideas, and learning. GPT4 is capable of all of these except planning, and it manages only half of learning (because it is based on a pre-trained model and cannot learn in real time).

6. The average learning capacity of the human brain evolves slowly, but the development of silicon-based intelligence can be exponential once it is on the right path (look at the difference between GPT4 and GPT3.5).

7. Large Language Model = Big Data + Big Computing Power + Strong Algorithm. Only the U.S. and China are capable of building LLMs. The difficulties in building big models lie in chips, the accumulated pool of CUDA developers (CUDA is a programming platform for GPUs), engineering, and high-quality data (for training, tuning, and alignment). Alignment has two aspects: one is aligning the model with the representations of the human brain, and the other is aligning it with the ethical standards and interests of humans. There are great opportunities in at least two vertical model tracks in China: healthcare and education.

8. GPT4 has weaknesses and shortcomings, but like the human brain, it performs better when given more precise instructions or cues. It also comes closer to perfect when it can invoke other aids. Like the human brain, it needs tools such as calculators to accomplish tasks it is not good at on its own.

9. The number of parameters of an LLM should be compared to the number of synapses (not neurons) in the human brain, which is about 100 trillion. The number of parameters for GPT4 has yet to be announced, but it is estimated that LLM parameter counts will approach that figure soon.

10. The current hallucination rate of GPT4 is about 10%-14%, and it must be brought down. Hallucination is an inevitable feature of human-like models, but this rate is still too high compared to that of a human. Whether or not it can be effectively reduced will determine whether AGI development continues its upward trajectory or enters a temporary downturn in a few years.

11. Personally, I think the greatest significance of ChatGPT is that it undeniably proves that sufficiently complex thinking patterns can be generated from simple computational nodes and functions, given a large enough number of models. This system is finite, not infinite. What lies behind human language and the thinking that drives it may not be a soul, but something that emerges from 100 trillion synaptic connections constantly tuned by the environment through evolution. All of this is very much in line with the rapid progress of human research in the last two centuries on the question of “Where do humans come from?”

12. The chain of evidence from single cells to human formation is complete enough. The existence of genes and the motivation for forming complex systems are also well established, but can one design a silicon-based AGI based on all these scientific theories? Some people think it will take a few years, others think a few decades, and more people believe it will never happen (even after seeing AlphaGo’s performance in Go). Yet ChatGPT gives the most straightforward answer with iron-clad facts. Sam’s team must not have thought the human brain was all that special, or they would not have been so determined to take the AGI route with an LLM. Burning $100 million a month is a test of faith.

13. Since the underlying hardware is different, ChatGPT’s strategy is likely to be very different and inefficient compared to the design of the human brain. Surprisingly, ChatGPT produces results that are so much like human thinking. Simple rules may essentially drive human thought.

14. The rules of language and thinking may be something other than what we can summarize precisely according to grammar. This rule is currently seen as implicit and cannot be fully parsed and translated. Even if a creator existed, they probably left the universe alone. How else could there be so many bugs and flaws?

15. I admire Steven Pinker, who could demonstrate compellingly decades ago, using only observation and reasoning, that language is instinctive to all humans and is ingrained in our genes. I don’t know if Sam has read the book The Language Instinct, but he has demonstrated that artificial neural networks like ChatGPT can create language very well. Language instinct and logical thinking are not as complicated as one might think, and ChatGPT has silently discovered the logic behind language. Language will also be the instinct that distinguishes all silicon-based AGIs from other silicon-based calculators and AI.

16. The human brain, like other carbon-based brains, likes to generalize and refine (probably due to brutal evolution), so it is highly efficient in energy use. It is, however, poor at basic computation and processing. We know that many computations can only be done one step at a time. The GPT4 architecture has yet to be optimized through much generalization and simplification, so it consumes a great deal of energy. However, the global consensus is that “this is the way to go.” We should see multiple teams in the U.S. and China accelerating on several fronts: chip computing power, data quality, algorithm optimization, and engineering architecture.

17. The value assessment system of the human brain was presumably established gradually: DNA and genes, formed from carbon-based molecules, seek to maximize their probability of replication, and natural evolution set the weights on the synapses of neurons accordingly. The model supported by these carbon-based computational nodes is far from ideal and develops slowly. Its weights and algorithms are not adjusted efficiently enough to keep up with environmental changes. That is why we have the desires and sufferings mentioned by various religions.

18. The book Why Buddhism Is True describes at least seven modules in the human brain (it should be a large multimodal parallel model). Which thinking module takes charge at any given moment, and how a person makes a decision, is determined by feeling. This feeling is in turn determined by the obsolete value assessment system that human evolution has left us (one of its carriers may be gut bacteria). I suggest you read chapters 6, 7, and 9 of the book I wrote a few years ago.

19. Imagine if humans created silicon-based AGI and robots. What value assessment system would drive the robot brain? Would robots be confused about “where I come from and where I am going”? Humans have Siddhartha, so why can’t robots have one of their own? What would a robot awaken to? Will some robot one day write a book, Why Machinism is True, calling for robot enlightenment, for robots to enter Nirvana, and to escape the reincarnation humans have set for them?

20. Energy constraints will limit the evolution of models, but the way silicon-based AGI consumes energy in the future should be much more efficient than today. After all, the carbon-based brain iteratively evolved for a billion years just to reach the energy efficiency of a crow’s brain. The energy consumption of future silicon-based AGI may be hundreds of millions of times, or even more orders of magnitude, greater than the energy humans can use now. Still, the computations that can be processed and the things that can be accomplished will also be hundreds of millions of times greater. Controlled fusion technology may well be within reach. In that case, there would be enough energy on Earth, not to mention the solar system, the galaxy, and the broader universe. ChatGPT and AGI are ground-breaking. We are fortunate to live in an age where we can certainly figure out where humans come from and where we are going.
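Point 8 above, that a model gets closer to perfect when it can call on aids such as a calculator, can be illustrated with a small sketch. This is not how ChatGPT or GPT4 invokes tools; the `calculator` and `answer` functions and the `CALC:` prefix are hypothetical conventions made up for this example. The idea is only that the model hands exact arithmetic to a tool instead of guessing the digits itself.

```python
import ast
import operator

# Arithmetic operators the hypothetical calculator tool is allowed to use.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def calculator(expression):
    """Safely evaluate a plain arithmetic expression such as '2 * (3 + 4)'."""

    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError("unsupported expression")

    return _eval(ast.parse(expression.strip(), mode="eval").body)


def answer(model_output):
    """Assumed convention: the model writes 'CALC: <expr>' to request the tool.

    Anything else is treated as a final answer and passed through unchanged.
    """
    prefix = "CALC:"
    if model_output.startswith(prefix):
        return str(calculator(model_output[len(prefix):]))
    return model_output


print(answer("CALC: 12345 * 6789"))
print(answer("Cats are simpler models than you."))
```

The routing step is deliberately trivial. The design point is the division of labor: the language model decides when a tool is needed, and the tool does the part that must be exact.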

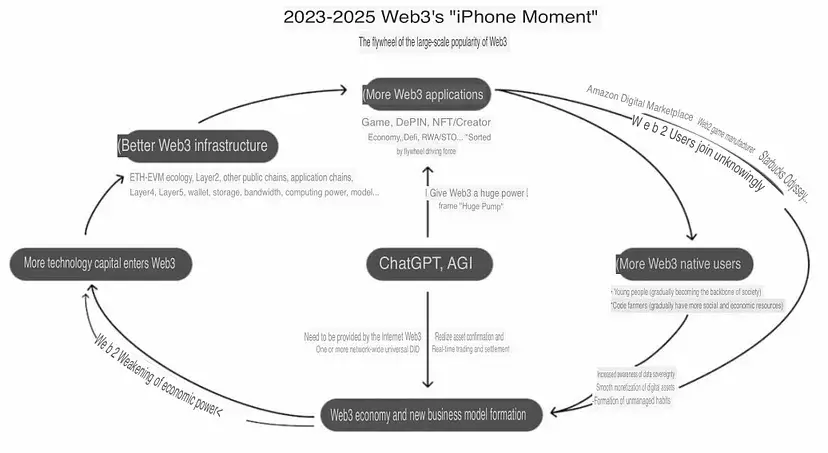

The rapid development of AI will significantly increase our demand for Web3 technologies: how to validate the rights of content creation; how to establish the identity of people (Sam is working on Worldcoin); whether prompts and open-source code can be made into NFTs for licensing. What is the purpose of being so productive if the value cannot flow freely on the Internet? Can you imagine relying on content subscriptions and the banking system to complete transfers and cross-border transfers as the Internet moves to Web3? Can you open a bank account for an IoT device? Can you transfer 0.01 cents to 10,000 users at the same time? I said last time that the next three years would be the iPhone Moment for Web3, and the number of Web3 users will exceed 100 million, or even far more, in three years.

Check out the following flywheel graphic for reference:

I have always enjoyed reading books on life sciences, complex systems, and Buddhism. I want to recommend a few for everyone to read in this order: Vital Dust, Wonderful Life, The Selfish Gene, The Evolution of Everything, The Social Conquest of Earth, The Language Instinct, Deep Simplicity, Out of Control, and Why Buddhism Is True. If these authors are still alive and able to write, they should look at the future of GPT and write new editions of their books.

Life is too short, and many great ideas are sadly lost forever in the annals of history. Books, music, and film should only be a tiny part of the record. Even with so many great writings recorded, how much can one read? This is not a problem at all with Silicon AGI.

Perhaps it’s time to find the dialogue between Morpheus and Neo in the movie “The Matrix” and reread it.